Beyond Chatbots: The Rise of AI Agents in Business Workflows

The enterprise technology landscape is undergoing a fundamental architectural shift. For the past few years, the corporate world has been captivated by the novelty of generative AI. Businesses integrated chat interfaces into their internal portals, armed their teams with intelligent drafting tools, and deployed customer-facing bots capable of conversational fluency. However, while these tools accelerated content creation and knowledge retrieval, they fundamentally remained passive. They required human prompts, human evaluation, and human execution to bridge the gap between digital suggestions and real-world actions.

In 2026, the era of simple prompts and passive digital assistants is drawing to a close. We are witnessing the “agentic leap,” a transition from systems that merely talk to systems that actively perform tasks. Autonomous AI agents represent the next evolution in business automation: software entities capable of perceiving their environment, reasoning through complex constraints, calling external tools, and executing multi-step workflows with minimal human intervention.

For business leaders, operations directors, and enterprise architects, deploying AI agents in 2026 is no longer an experimental luxury; it is a baseline competitive requirement. Industry data indicates that organizations deploying agentic AI systems are projecting average returns on investment (ROI) exceeding 171%, driven by large reductions in operational costs and gains in workflow capacity. Furthermore, the global agentic AI market is growing at a steep compound annual rate and rapidly expanding into a multi-billion-dollar sector. This report breaks down the mechanics of agentic AI, explores transformative use cases across customer support, supply chain, and human resources, and details how modern digital workflows are architected for enterprise environments.

The Paradigm Shift: Generative AI Chatbots vs. Autonomous AI Agents

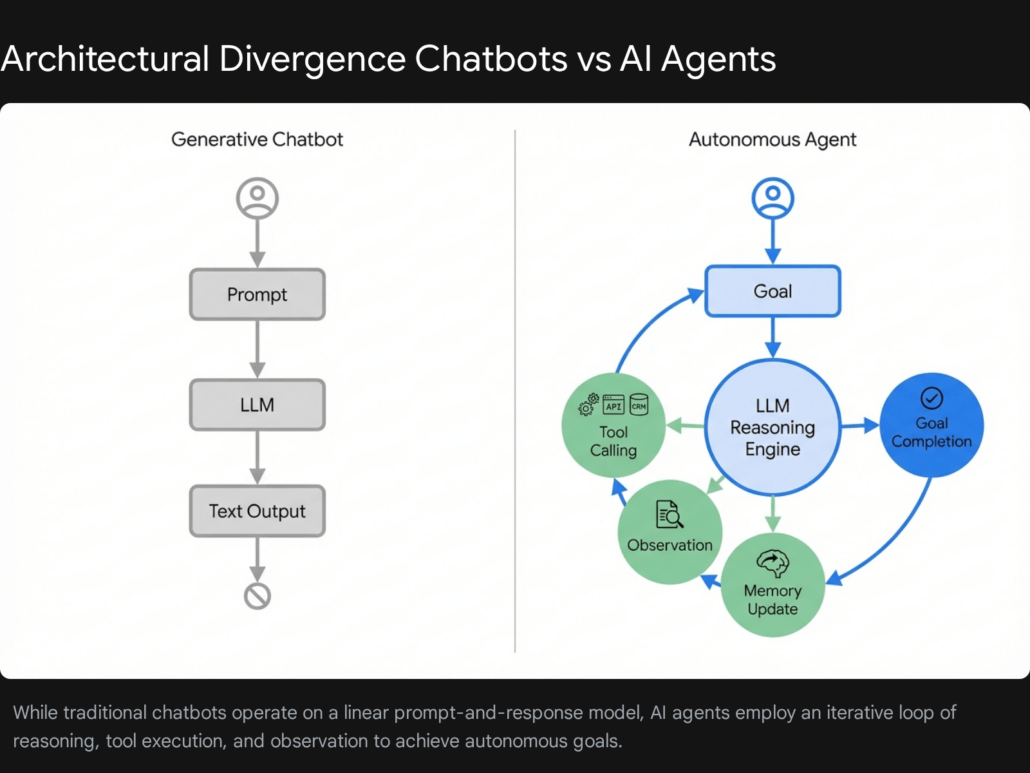

To fully grasp the business impact of agentic workflows, it is crucial to understand the technical and philosophical divergence between a generative AI chatbot and an autonomous AI agent. While both utilize Large Language Models (LLMs) as their foundational reasoning engines, their deployment architectures are vastly different.

A generative AI chatbot is a reactive system. Its primary function is to process an input prompt, draw on what the model learned in training or on passages retrieved from a connected vector database through Retrieval-Augmented Generation (RAG), and generate a text-based response. If a user asks a chatbot to “refund a customer,” the chatbot might provide a beautifully formatted, step-by-step guide on how the human agent can log into the payment gateway and process the refund. It stops abruptly at the boundary of conversation, offering zero operational leverage beyond information synthesis.

An AI agent, conversely, is a proactive, goal-driven system equipped with digital agency. When an agent is given the objective to “refund a customer,” it utilizes a cognitive framework—often the ReAct (Reason + Act) pattern—to achieve that goal autonomously. The agent will read the request, securely authenticate into the company’s Stripe or PayPal API, process the financial transaction, update the Zendesk support ticket, and send a confirmation email to the customer. It does not just outline the work; it completes the work.

This fundamental difference dictates technology stacks, corporate risk profiles, and business ROI.

Core Architectural Differences in Enterprise Deployments

| Capability | Generative AI Chatbot | Autonomous AI Agent |
| --- | --- | --- |
| Operational Mode | Reactive: Waits for a human prompt to generate a single, isolated response. | Proactive: Pursues a defined goal across multiple independent steps and system interactions. |
| System Integration | Isolated: Primarily interacts with a text interface and static knowledge bases. | Integrated: Connects deeply with APIs, databases, and enterprise software (CRMs, ERPs, HRIS). |
| Reasoning Capacity | Linear: Processes a prompt and outputs the most statistically probable text based on training. | Iterative: Evaluates options, interprets tool outputs, adapts to errors, and adjusts plans dynamically. |
| Memory Management | Short-term: Retains context only within the immediate user chat session. | Long-term: Utilizes persistent memory databases to recall past interactions and user preferences indefinitely. |
| Execution Reality | Enhances human productivity by assisting with drafting, coding, and summarization. | Multiplies workforce capacity by executing end-to-end workflows autonomously, acting as a digital employee. |

The realization that an LLM can be used not just for generating text, but as a dynamic routing and reasoning engine to control external software, is the catalyst for the current enterprise AI boom. Organizations are moving away from measuring AI success by “hours saved drafting emails” to “percentage of core business processes fully automated.” At Tool1.app, we have observed firsthand how transitioning our clients from isolated AI novelties to fully integrated, agentic operating models creates immediate, measurable impacts on their bottom line.

The ROI Awakening: Why Agentic AI is Dominating 2026 Budgets

The shift toward AI agents in 2026 is driven by hard financial metrics, not speculative technology trends. Corporate leaders are demanding transformative value from their AI investments. Early deployments of generative AI provided generalized productivity boosts (efficiency gains here, capacity growth there), but they rarely added up to wholesale business transformation.

Agentic AI changes this equation by targeting the fundamental cost structures of enterprise operations. Market intelligence reveals that the sector is expanding at an astonishing compound annual growth rate of over 43%. U.S. enterprises deploying agentic workflows are recording returns of up to 192%, significantly outpacing traditional software automation.
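To calibrate figures like these, recall that ROI is conventionally net gain divided by cost, so anything above 100% means the investment more than doubled. The sketch below uses hypothetical dollar amounts chosen only to illustrate the arithmetic; they are not drawn from the market data cited above.

```python
def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a percentage: (benefit - cost) / cost * 100."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100


# Hypothetical figures: 100k USD spent on an agent program, 271k USD in measured benefit.
print(round(roi_percent(271_000, 100_000), 1))  # 171.0
```

Read this way, a 171% ROI means every dollar invested comes back along with an additional $1.71.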

These exceptional returns are derived from the unique scalability of agents. Traditional robotic process automation (RPA) breaks the moment a user interface changes or an unexpected data format is introduced. Agentic AI, powered by the semantic understanding of LLMs, is resilient. If an API returns an unexpected error, the agent can read the error message, reason through the problem, search for an alternative endpoint, and successfully complete the task—all without human oversight. This resilience drastically reduces the maintenance overhead that plagues legacy automation systems, allowing businesses to scale their operations with non-linear cost structures.

Transformative Business Use Cases

The integration of agentic AI is highly concentrated in operational bottlenecks where speed, accuracy, and cross-system coordination are paramount. The following sections explore the top three enterprise domains where multi-agent workflows are actively redefining business standards.

1. Autonomous Customer Support and Service Resolution

Customer support was one of the earliest adopters of standard AI, but early deployments often resulted in frustrated users trapped in infinite loops of rule-based dialogue. Customers who needed real help were met with digital gatekeepers that could only deflect tickets, never resolve them.

Today’s autonomous customer support agents go far beyond ticket deflection—they provide complete ticket resolution. A true AI agent uses tools (APIs) to execute multi-step tasks across a company’s entire technology stack. When a customer initiates a complex request, the agentic system performs an autonomous sequence of actions that mimics a highly trained human representative.

Consider a scenario where a SaaS user reports a critical software crash:

- Context Ingestion: The agent instantly identifies the user, pulling their subscription tier from Stripe, their purchase history from Salesforce, and recent interactions from Zendesk.

- Intent and Constraint Analysis: The agent determines the user’s specific technical issue. It cross-references this exact error code with active incident reports in engineering tools like Jira or Datadog.

- Execution via Tool Calling: Recognizing a known bug affecting premium users, the agent links the user’s support ticket directly to the engineering Jira board. It then interfaces with the billing API to apply a prorated credit to their account as an apology, and generates a highly personalized response detailing the expected resolution time based on the engineering team’s SLA.

- Human Escalation (The Guardrail): If the request involves a high-value enterprise client threatening churn, the agent detects the negative sentiment and routes a summarized, prioritized brief to a human Customer Success Manager via Slack or Microsoft Teams.

This level of orchestration reduces Tier-1 support costs dramatically, with some enterprises reporting up to an 80% reduction in operational overhead. More importantly, it slashes resolution times from hours to seconds. The bottleneck in customer support is no longer the intelligence of the LLM; it is the robustness of the API integrations.
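The four-step support flow described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the account data, incident IDs, and action names are hypothetical, the keyword check is a crude stand-in for a real sentiment model, and actions are recorded in a list rather than sent to Stripe, Jira, or Zendesk. Note that the escalation guardrail is checked before any write action is taken.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    user_id: str
    error_code: str
    message: str
    actions: list[str] = field(default_factory=list)


# Stand-ins for CRM, billing, and incident-tracker lookups (all hypothetical data).
ACCOUNTS = {"u-1": {"tier": "premium", "enterprise": False},
            "u-2": {"tier": "enterprise", "enterprise": True}}
KNOWN_INCIDENTS = {"E500": "ENG-42"}
CHURN_SIGNALS = ("cancel", "switch vendors", "unacceptable")


def handle_ticket(ticket: Ticket) -> Ticket:
    account = ACCOUNTS[ticket.user_id]                   # 1. context ingestion
    incident = KNOWN_INCIDENTS.get(ticket.error_code)    # 2. intent and constraint analysis

    # 4. guardrail: high-value accounts showing churn risk go straight to a human
    at_risk = any(signal in ticket.message.lower() for signal in CHURN_SIGNALS)
    if account["enterprise"] and at_risk:
        ticket.actions.append("escalate_to_csm")
        return ticket

    # 3. execution via tool calls (recorded here instead of hitting real APIs)
    if incident:
        ticket.actions.append(f"link_ticket:{incident}")
        if account["tier"] == "premium":
            ticket.actions.append("apply_prorated_credit")
    ticket.actions.append("send_reply")
    return ticket
```

Calling `handle_ticket(Ticket("u-1", "E500", "App crashed on export"))` yields the link, credit, and reply actions, while an enterprise customer threatening to cancel produces only the escalation.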

2. Automated Supply Chain Management and Logistics

Supply chains have historically operated on static weekly or monthly planning cycles. You plan on Monday, execute through Friday, and review the inevitable disruptions on Sunday. However, global logistics is a chaotic environment defined by minute-by-minute volatility. In 2026, AI agents in supply chain management are evolving into dynamic, multi-agent ecosystems that continuously optimize operations in real-time.

Consider a highly realistic disruption scenario: a major international port unexpectedly shuts down due to an industrial strike or extreme weather at 3:00 PM. In a traditional enterprise, this triggers a cascade of panicked emails, emergency meetings, and manual spreadsheet calculations to determine the downstream impact. By the time human planners agree on a solution, alternative routes are already booked by faster competitors.

An autonomous supply chain AI ecosystem reacts within milliseconds, utilizing specialized agents working in concert:

- The Intelligence Agent continuously monitors unstructured global data feeds, weather patterns, and news sentiment. It detects the port closure immediately and flags the systemic risk.

- The Logistics Agent calculates the exact inventory currently en route to that specific port. It automatically runs complex simulations utilizing digital twins of the supply chain to find the optimal alternative ports, factoring in real-time fuel costs, berthing delays, and overland transport fees.

- The Procurement Agent assesses the delay’s impact on manufacturing hubs awaiting those raw materials. It autonomously reaches out to secondary, pre-approved local suppliers, negotiating lead times via email and placing temporary bridging orders to ensure assembly lines do not halt.

- The Orchestration Agent finalizes the new routes, updates the central ERP system (e.g., SAP or Oracle), issues rerouting commands to ocean carriers, and alerts human executives of the mitigated financial impact.

Supply Chain Modernization ROI

| Metric | Traditional Supply Chain | Agentic Supply Chain Optimization | Business Impact |
| --- | --- | --- | --- |
| Decision Latency | Days to Weeks | Milliseconds to Seconds | Captures alternative routes before competitors. |
| Inventory Levels | High buffer stock required | Dynamically balanced | Up to 35% reduction in carrying costs. |
| Logistics Costs | Static routing | Continuous optimization | Up to 15% reduction in freight and fuel spend. |
| Disruption Response | Reactive and manual | Predictive and autonomous | Prevents manufacturing downtime and stockouts. |

This is the power of a “Multi-Agent System.” No single AI model handles the entire global supply chain. Instead, distinct agents with specific roles, system permissions, and guardrails negotiate and collaborate, creating an agile, self-optimizing network that turns supply chain resilience into a competitive moat.
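One simple way to picture this division of labor is a pipeline of narrow agents that each read and update a shared state. The sketch below is illustrative only: the port names, costs, and decision rules are hypothetical, and each "agent" is a plain function rather than an LLM-backed service.

```python
from typing import Callable

State = dict  # shared blackboard passed from agent to agent

# Hypothetical data: extra cost (USD) and delay (days) of rerouting to each port.
ALTERNATIVES = {"Rotterdam": {"cost": 40_000, "delay_days": 2},
                "Antwerp": {"cost": 35_000, "delay_days": 5}}


def intelligence_agent(state: State) -> State:
    # Flags a disruption when the destination appears in the closure feed.
    state["disrupted"] = state["destination"] in state["closed_ports"]
    return state


def logistics_agent(state: State) -> State:
    if state["disrupted"]:
        # Picks the cheapest open alternative; a real system would simulate far more.
        open_ports = {p: v for p, v in ALTERNATIVES.items() if p not in state["closed_ports"]}
        state["new_port"] = min(open_ports, key=lambda p: open_ports[p]["cost"])
        state["delay_days"] = open_ports[state["new_port"]]["delay_days"]
    return state


def procurement_agent(state: State) -> State:
    # Orders bridging stock only if the delay outlasts the inventory buffer.
    state["bridge_order"] = state.get("delay_days", 0) > state["buffer_days"]
    return state


def orchestration_agent(state: State) -> State:
    state["summary"] = (f"Reroute to {state['new_port']}; bridge order: {state['bridge_order']}"
                        if state["disrupted"] else "No action needed")
    return state


PIPELINE: list[Callable[[State], State]] = [
    intelligence_agent, logistics_agent, procurement_agent, orchestration_agent]


def run(state: State) -> State:
    for agent in PIPELINE:
        state = agent(state)
    return state
```

Keeping each agent's responsibility this narrow is what makes per-agent permissions and guardrails practical: the procurement step, for example, never needs routing credentials.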

3. HR Scheduling, Onboarding, and Internal Operations

Human Resources teams are frequently bogged down by high-volume, repetitive administrative requests. Employees constantly ask about PTO policies, request IT equipment, need access to software tools, and submit complex paperwork. Each of these interactions pulls HR professionals away from strategic initiatives like talent development, culture building, and retention planning. Agentic AI reclaims this lost capacity by autonomously executing multi-step internal workflows.

Employee onboarding is a prime example of a process ripe for agentic automation. Historically, when a candidate signs an offer letter, it triggers a fractured, manual process spanning multiple departments. HR downloads a PDF, manually types data into a Human Resources Information System (HRIS) like Workday, emails IT to provision a laptop, emails security for badge access, and manually coordinates calendar blocks for orientation.

An HR AI Agent transforms this into a seamless, instantaneous workflow focused on outcomes rather than tasks:

- Upon detecting the digital signature via a webhook, the AI agent autonomously extracts the candidate’s core data (Start Date, Role, Department, Salary).

- It interacts with the Workday API to create the official employee profile without manual data entry.

- It uses the ServiceNow API to generate a hardware provisioning ticket for IT, specifically requesting the correct laptop model based on the new hire’s role (e.g., a high-performance machine with specific IDEs for a software engineer).

- It interfaces with Azure Active Directory to provision email addresses, Slack accounts, and specific software licenses.

- It accesses Google Workspace or Microsoft 365 to scan the calendars of the hiring manager, the IT department, and the HR staff, autonomously scheduling mutually available orientation sessions and sending the invites.

Through seamless enterprise system integrations, these agents provide a 24/7 self-service layer for all employees. Whether an employee is asking about a complex parental leave policy, submitting a PTO request, or enrolling in benefits during open enrollment, the agent acts as an autonomous digital HR assistant. This eliminates the response overhead that keeps HR teams stuck answering the same questions, allowing the department to focus entirely on human-centric strategy.
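The onboarding sequence described above can be sketched as two steps: parse the signed-offer event, then derive the ordered requests the agent would send. Everything named here is an assumption for illustration: the webhook payload shape, the system labels, and the role-to-laptop policy are hypothetical, and no real Workday, ServiceNow, or directory API is called.

```python
from dataclasses import dataclass

# Hypothetical role-to-hardware policy; a real deployment would read IT's catalog.
LAPTOP_BY_ROLE = {"software engineer": "high-performance workstation"}
DEFAULT_LAPTOP = "standard laptop"


@dataclass(frozen=True)
class NewHire:
    name: str
    role: str
    department: str
    start_date: str


def parse_signed_offer(payload: dict) -> NewHire:
    """Extract the core fields from a (hypothetical) e-signature webhook payload."""
    if payload.get("event") != "offer.signed":
        raise ValueError("not a signed-offer event")
    c = payload["candidate"]
    return NewHire(c["name"], c["role"], c["department"], c["start_date"])


def plan_onboarding(hire: NewHire) -> list[tuple[str, dict]]:
    """Return the ordered (system, request) pairs the agent would send out."""
    laptop = LAPTOP_BY_ROLE.get(hire.role.lower(), DEFAULT_LAPTOP)
    return [
        ("hris", {"action": "create_profile", "name": hire.name, "start": hire.start_date}),
        ("it_tickets", {"action": "provision_hardware", "model": laptop}),
        ("identity", {"action": "create_accounts", "department": hire.department}),
        ("calendar", {"action": "schedule_orientation", "on_or_after": hire.start_date}),
    ]
```

Separating the plan from its execution also gives reviewers a single artifact to audit before any system is touched.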

The Technical Foundation: The Model Context Protocol (MCP)

To build these highly integrated, action-oriented systems, the AI industry required a standardized method for models to communicate with disparate enterprise software. Before a shared protocol existed, developers had to write brittle, bespoke API connectors for every single tool an AI agent needed to use. If an agent needed to talk to Jira, Salesforce, and a local SQL database, it required three separate, highly specific integrations. This resulted in a tangled web of connectors, duplicated effort, and ungoverned integrations that security teams could not audit, a problem often filed under “shadow AI.”

The solution that has rapidly become the foundational building block for Agentic AI is the Model Context Protocol (MCP).

Championed as an open-source standard, MCP acts as a universal adapter. It defines a single, secure protocol for how AI applications communicate with any external data source or software application. Instead of hard-coding complex integrations into the LLM’s prompt logic, organizations deploy MCP servers that sit in front of their enterprise tools. The AI agent, acting as an MCP client, can instantly discover and utilize the tools exposed by the server.

This dramatically reduces development complexity and accelerates time-to-value. Furthermore, it centralizes enterprise governance. IT departments can enforce strict access controls at the MCP server level, ensuring that an AI agent cannot access highly classified financial data or execute destructive database commands unless explicitly permitted by the protocol’s security layer. As an enterprise upgrades its core software—switching from one CRM to another, for instance—they simply deploy a new MCP server. The AI agent seamlessly adapts to the new tools without requiring months of costly redevelopment.
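To make the discovery-and-call pattern concrete, here is a toy in-process handler that answers JSON-RPC 2.0 requests the way an MCP-style server might. The `tools/list` and `tools/call` method names and the result shapes follow the published MCP specification as we understand it; the tool names, the `ALLOWED` set, and the handler itself are hypothetical, and the sketch omits the initialization handshake and transport layer a real server needs. It is not the official SDK.

```python
import json

# Tools this toy server knows about. Names and schemas are hypothetical.
TICKET_SCHEMA = {"type": "object",
                 "properties": {"ticket_id": {"type": "string"}},
                 "required": ["ticket_id"]}
TOOLS = {
    "get_ticket": {"description": "Fetch a support ticket by ID.",
                   "inputSchema": TICKET_SCHEMA,
                   "handler": lambda args: f"Ticket {args['ticket_id']}: status open"},
    "delete_ticket": {"description": "Permanently delete a ticket.",
                      "inputSchema": TICKET_SCHEMA,
                      "handler": lambda args: f"Deleted {args['ticket_id']}"},
}
ALLOWED = {"get_ticket"}  # governance lives at the server: destructive tools stay hidden


def handle(request: dict) -> dict:
    """Answer one JSON-RPC 2.0 request."""
    base = {"jsonrpc": "2.0", "id": request["id"]}
    if request["method"] == "tools/list":
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items() if n in ALLOWED]
        return {**base, "result": {"tools": tools}}
    if request["method"] == "tools/call":
        name = request["params"]["name"]
        if name not in ALLOWED:
            return {**base, "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
        text = TOOLS[name]["handler"](request["params"].get("arguments", {}))
        return {**base, "result": {"content": [{"type": "text", "text": text}], "isError": False}}
    return {**base, "error": {"code": -32601, "message": "Method not found"}}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "get_ticket", "arguments": {"ticket_id": "T-7"}}})
print(json.dumps(call["result"], indent=2))
```

Because the agent only ever sees what `tools/list` returns, removing a tool from the allowlist withdraws the capability without touching the agent's prompt or code.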

How Tool1.app Builds Custom Agents Using Python and LLMs

The conceptual promise of agentic AI is undeniable, but the execution requires rigorous software engineering. When the engineering team at Tool1.app architects these multi-agent ecosystems for our clients, we rely on a modern, robust technology stack centered around Python, high-tier reasoning LLMs, and advanced orchestration frameworks.

Building a production-ready AI agent requires moving far beyond a simple API call. It requires constructing a persistent cognitive loop equipped with memory, secure tool access, and strict guardrails.

1. Defining the Agent Architecture and ReAct Pattern

We build upon the ReAct (Reason and Act) architectural pattern. In this framework, the LLM is instructed to think iteratively. It receives a user query, formulates a logical thought process, selects an appropriate tool from its provided toolkit, executes the tool, observes the result, and repeats the process until the final goal is met.
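The reason-act-observe cycle can be shown without any model at all. In the minimal sketch below, `scripted_policy` is a hypothetical stand-in for the LLM (it returns a thought, an action, and an argument), and the two tools return canned strings; only the loop structure is the point.

```python
from typing import Callable

# Hypothetical tools; each takes a string argument and returns an observation string.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: "paid 49.00 USD" if order_id == "A-100" else "not found",
    "issue_refund": lambda order_id: f"refund queued for {order_id}",
}


def scripted_policy(goal: str, history: list[tuple[str, str, str]]) -> tuple[str, str, str]:
    """Stand-in for the LLM: returns (thought, action, argument) given past observations."""
    if not history:
        return ("I should confirm the order exists first.", "lookup_order", "A-100")
    last_action, _, last_observation = history[-1]
    if last_action == "lookup_order" and last_observation.startswith("paid"):
        return ("The order is paid, so a refund is valid.", "issue_refund", "A-100")
    return ("Nothing further to do.", "finish", last_observation)


def react_loop(goal: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str, str]] = []
    for _ in range(max_steps):                                  # hard cap on iterations
        thought, action, arg = scripted_policy(goal, history)   # reason
        if action == "finish":
            return arg
        observation = TOOLS[action](arg)                        # act
        history.append((action, arg, observation))              # observe, then repeat
    return "stopped: step limit reached"


print(react_loop("refund order A-100"))  # refund queued for A-100
```

In a real agent the policy function would be an LLM call that receives the goal, the tool descriptions, and the history so far; the `max_steps` cap is worth keeping either way.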

For complex workflows requiring strict state management and auditability, Tool1.app utilizes graph-based orchestration frameworks. Unlike standard linear chains, these frameworks allow developers to model agent workflows as directed graphs of nodes and edges. Because such a graph may contain cycles (which a strictly acyclic DAG would forbid), we can create highly controlled loops, maintain state across long-running asynchronous tasks, and build critical “human-in-the-loop” checkpoints where the agent pauses and waits for a manager’s approval before executing a sensitive action, such as wiring funds or sending a mass email.
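A graph-style workflow with a bounded loop and an approval checkpoint can be sketched in a few lines of plain Python. This is an illustration of the idea, not the API of any particular orchestration framework: the node names, the state keys, and the `run` helper are all hypothetical.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], Optional[str]]  # returns the next node's name, or None to stop


def draft(state: State) -> str:
    state["attempts"] = state.get("attempts", 0) + 1
    state["draft"] = f"payment of {state['amount']} USD (attempt {state['attempts']})"
    return "validate"


def validate(state: State) -> str:
    # The edge back to "draft" forms a controlled cycle; the attempt cap bounds it.
    if state["amount"] <= 0 and state["attempts"] < 3:
        state["amount"] = abs(state["amount"]) or 1
        return "draft"
    return "await_approval"


def await_approval(state: State) -> Optional[str]:
    # Human-in-the-loop checkpoint: stop until a manager sets state["approved"].
    if state.get("approved") is None:
        state["paused_at"] = "await_approval"
        return None
    return "execute" if state["approved"] else None


def execute(state: State) -> None:
    state["result"] = f"sent {state['draft']}"
    return None


GRAPH: dict[str, Node] = {"draft": draft, "validate": validate,
                          "await_approval": await_approval, "execute": execute}


def run(state: State, start: str = "draft") -> State:
    node: Optional[str] = start
    while node is not None:
        node = GRAPH[node](state)
    return state


state = run({"amount": -250})                     # pauses at the checkpoint
state["approved"] = True                          # the manager signs off
state = run(state, start=state["paused_at"])      # resumes from where it stopped
print(state["result"])
```

Because the whole workflow state lives in one dictionary, it can be persisted while the agent waits and inspected afterward for an audit trail.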

2. The Power Layer: Custom Tool Implementation in Python

An agent’s utility is entirely dependent on its tools. In Python, tools are essentially discrete functions wrapped in descriptive metadata. The LLM reads this metadata to understand exactly what the tool does, when it should be used, and what arguments it requires to execute successfully.
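To illustrate what "descriptive metadata" means in practice, the sketch below derives a JSON-schema-style description from an ordinary function using only the standard library. The `describe_tool` helper, the type mapping, and the `get_pto_balance` placeholder are hypothetical; agent frameworks generate comparable descriptions with their own decorators and richer type handling.

```python
import inspect
from typing import Callable

PY_TO_JSON = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func: Callable) -> dict:
    """Build a JSON-schema-style description from a function's signature and docstring."""
    sig = inspect.signature(func)
    properties, required = {}, []
    for name, param in sig.parameters.items():
        # Unannotated or unrecognized types fall back to "string" in this sketch.
        properties[name] = {"type": PY_TO_JSON.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_pto_balance(employee_id: str, year: int = 2026) -> str:
    """Return the remaining PTO days for an employee in a given year."""
    return f"{employee_id}:{year}"  # placeholder body; only the metadata matters here


print(describe_tool(get_pto_balance))
```

The model never sees the function body, only this description, which is why a precise docstring and accurate type hints matter as much as the implementation.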

Consider a practical implementation example of how Tool1.app might build a specialized tool for an HR agent to query a proprietary employee database for Paid Time Off (PTO) balances:

```python
import json
import os
import sqlite3
from contextlib import closing

# Note: initialize_agent and AgentType belong to LangChain's legacy agent API
# (deprecated since LangChain 0.1); pin a compatible version to run this as written.
from langchain.agents import AgentType, initialize_agent
from langchain.tools import tool
from langchain_openai import ChatOpenAI


# 1. Define the specific, actionable tool with clear metadata
@tool
def lookup_employee_pto(employee_id: str) -> str:
    """
    Queries the secure HR database to return the available Paid Time Off (PTO)
    balance for a given employee ID. Use this tool strictly when a user
    asks about their vacation days or leave balance.
    """
    try:
        # Database connection (simulated for demonstration); closing() releases
        # the connection even if the query raises
        with closing(sqlite3.connect("enterprise_hr_secure.db")) as conn:
            # Parameterized query to prevent SQL injection
            cursor = conn.execute(
                "SELECT pto_balance FROM employees WHERE emp_id=?", (employee_id,)
            )
            result = cursor.fetchone()

        if result:
            # fetchone() returns a one-element tuple; unpack the balance itself
            return json.dumps({"status": "success", "employee_id": employee_id, "pto_days": result[0]})
        return json.dumps({"status": "error", "message": f"Could not locate employee ID: {employee_id}"})
    except Exception as e:
        return json.dumps({"status": "critical_error", "message": str(e)})


# 2. Initialize the reasoning engine (LLM)
# Temperature is set to 0.0 to favor repeatable, precise execution over creativity
llm = ChatOpenAI(
    model_name="gpt-4o",
    temperature=0.0,
    api_key=os.getenv("OPENAI_API_KEY"),
)

# 3. Compile the enterprise toolkit
enterprise_tools = [lookup_employee_pto]

# 4. Initialize the Agent orchestration with strict parameters
hr_agent = initialize_agent(
    tools=enterprise_tools,
    llm=llm,
    agent=AgentType.OPENAI_FUNCTIONS,
    verbose=True,  # Allows developers to observe the reason-and-act loop in the console
)

# Example Execution:
# hr_agent.run("Can you check how many vacation days employee ID 8472 has left?")
```

In the architecture above, the LLM evaluates the user’s natural language request. It realizes it cannot answer the question from its pre-training because the data is proprietary. It reads the semantic description of the lookup_employee_pto tool, accurately extracts the employee_id from the natural language prompt, and executes the Python function. It then ingests the structured JSON output from the database and formats a conversational, empathetic response for the employee.

3. Memory and State Management

Traditional chatbots suffer from digital amnesia; AI agents do not. For an agent to be useful in a business context, it must remember user preferences, past actions, and overarching business constraints.

- Short-Term Context: We utilize in-memory buffers so the agent remembers the context of the current multi-step transaction. If a user asks to book a flight and then says “change it to Tuesday,” the agent understands what “it” refers to.

- Long-Term Memory: For enterprise deployments, we implement vector databases (like Pinecone, Weaviate, or Redis) to store historical user interactions, organizational policies, and specific user preferences safely. Before the agent acts, it queries this vector space to retrieve highly relevant context, ensuring its actions are aligned with specific corporate guidelines.
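The retrieve-before-acting step behind long-term memory can be sketched without a real vector database. In the toy version below, word counts stand in for learned embeddings and a Python list stands in for the store; the `LongTermMemory` class and the stored facts are hypothetical, and a production system would call an embedding model and a service such as those named above.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LongTermMemory:
    """In-process stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = LongTermMemory()
memory.remember("Refunds above 500 USD require manager approval")
memory.remember("Dana prefers aisle seats on morning flights")
memory.remember("Parental leave policy grants 16 weeks")

print(memory.recall("book a flight for Dana"))
```

The recalled text would be prepended to the agent's prompt before it plans its next action, which is how stored preferences and policies end up shaping behavior.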

4. Embedding Governance, Security, and Guardrails

The most critical aspect of deploying AI agents in a business environment is safety. An autonomous system connected to production databases must be constrained. A rogue agent stuck in an infinite loop could rack up thousands of dollars in API costs in minutes, or worse, alter secure records improperly. At Tool1.app, we implement robust governance directly into the codebase:

- Semantic Guardrails: Validating the output of the LLM against expected JSON schemas before any external action is executed.

- Read-Only Defaults: Ensuring agents operate with the principle of least privilege. An agent built for financial data analysis is given only “read-only” database tools and credentials, so it has no code path for altering or deleting records.

- Circuit Breakers and Rate Limits: Establishing hard programmatic limits on the number of API calls an agent can make within a single execution loop to prevent runaway costs or infinite recursive loops.
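Two of these guardrails, output validation and a call budget, fit in a short sketch. The schema, class names, and the refund action are hypothetical; a production system would more likely use a schema library and per-tool budgets, but the ordering (validate first, then charge the budget, then act) carries over.

```python
import json


class CircuitBreakerTripped(RuntimeError):
    """Raised when an agent run exceeds its tool-call budget."""


class CallBudget:
    """Hard cap on tool calls within one agent run."""

    def __init__(self, max_calls: int) -> None:
        self.max_calls = max_calls
        self.used = 0

    def spend(self) -> None:
        if self.used >= self.max_calls:
            raise CircuitBreakerTripped(f"exceeded {self.max_calls} tool calls")
        self.used += 1


# Hypothetical schema for a refund action: field name -> required Python type.
REFUND_SCHEMA = {"order_id": str, "amount": float}


def validate_action(raw_llm_output: str, schema: dict) -> dict:
    """Parse model output and reject anything that does not match the schema exactly."""
    data = json.loads(raw_llm_output)  # malformed JSON raises a ValueError subclass
    if not isinstance(data, dict) or set(data) != set(schema):
        raise ValueError(f"expected exactly the fields {sorted(schema)}")
    for key, expected in schema.items():
        if not isinstance(data[key], expected):
            raise ValueError(f"{key} must be {expected.__name__}")
    return data


def guarded_call(raw_llm_output: str, budget: CallBudget) -> dict:
    action = validate_action(raw_llm_output, REFUND_SCHEMA)  # validate before acting
    budget.spend()                                           # then charge the budget
    return action  # a real agent would now invoke the tool with these arguments
```

With `CallBudget(1)`, a first well-formed refund request passes and a second raises `CircuitBreakerTripped`, which the surrounding loop can treat as a signal to stop and alert a human.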

5. The Future is Multi-Agent Orchestration

As we look toward the remainder of 2026 and beyond, single-agent architectures are making way for multi-agent orchestration. Instead of building one massive, omnipotent agent that tries to do everything (and often hallucinates), developers are building specialized micro-agents that collaborate.

Imagine deploying an entire digital marketing department autonomously. A “Research Agent” scours the web and internal databases for trending industry topics. It passes its structured findings to a “Writer Agent” that drafts a comprehensive blog post. The draft is seamlessly routed to a “Critic Agent,” which evaluates the text strictly against the company’s brand voice guidelines and SEO requirements. The Writer Agent revises the text based on the Critic’s feedback, and finally, a “Deployment Agent” pushes the polished post to a WordPress CMS via API.
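The writer-critic hand-off is essentially a bounded revision loop. The sketch below replaces each LLM-backed agent with a trivial function so the control flow is visible; the banned-phrase list, the function names, and the canned research notes are hypothetical.

```python
BANNED_PHRASES = ("game-changing", "revolutionary")  # hypothetical brand-voice rules


def research_agent(topic: str) -> list[str]:
    # Stand-in for web and database research.
    return [f"{topic} adoption is rising", f"{topic} needs strong governance"]


def writer_agent(notes: list[str], feedback: list[str]) -> str:
    draft = "A game-changing look: " + "; ".join(notes) + "."
    for phrase in feedback:              # revise by replacing whatever the critic flagged
        draft = draft.replace(phrase, "practical")
    return draft


def critic_agent(draft: str) -> list[str]:
    # Returns the list of violations; an empty list means the draft is approved.
    return [p for p in BANNED_PHRASES if p in draft]


def produce_post(topic: str, max_rounds: int = 3) -> str:
    notes = research_agent(topic)
    feedback: list[str] = []
    for _ in range(max_rounds):
        draft = writer_agent(notes, feedback)
        issues = critic_agent(draft)
        if not issues:
            return draft                 # hand-off point for a deployment agent
        feedback.extend(issues)
    raise RuntimeError("critic never approved the draft")


print(produce_post("Agentic AI"))
```

The `max_rounds` cap matters: without it, a writer and critic that disagree indefinitely would loop forever and keep consuming tokens.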

This level of collaborative automation is not science fiction; it is the current standard of high-end software development. The businesses that embrace this capability will decouple their operational growth from their human headcount, achieving scale, consistency, and efficiency that competitors relying on manual labor simply cannot match.

Conclusion: Upgrading Your Digital Workforce

The evolution from prompt-based generative chatbots to autonomous, agentic workflows is the defining technological shift of this decade. In 2026, AI agents represent a fundamentally new operating model. Organizations that successfully implement these systems are not merely cutting incremental costs; they are creating agile, error-resistant, and highly scalable operations. They are turning static software into active digital employees capable of handling everything from volatile supply chain disruptions to seamless HR onboarding and complex customer resolution.

However, realizing the highly touted 171% ROI requires more than just API keys and basic scripts. It demands rigorous system architecture, custom tool development, strict security guardrails, and deep expertise in LLM orchestration and Python development. Navigating this transition requires a specialized engineering partner who understands both the profound technical complexity and the overarching business objectives.

Ready to upgrade your workforce? Contact us to build your first autonomous AI agent.

If your organization is ready to move beyond experimental chatbots and implement secure, revenue-driving automation, we are here to help. As experts in mobile/web applications, custom websites, Python automations, and advanced AI/LLM solutions, Tool1.app is perfectly positioned to architect your digital transformation. Visit Tool1.app today to schedule a consultation, and let our engineering team build the autonomous agents that will power your business efficiency into the future.